Can Mimic AI Replicate Human Emotion in Voice Messages?

At Drop Cowboy, we’re fascinated by the rapid advancements in AI voice technology. The ability of Mimic AI to replicate human voices has sparked intense debate in the tech world.

But can these artificial voices truly capture the depth of human emotion? We’ll explore how well AI voices can convey emotion today and what that could mean for communication.

How Mimic AI Clones Human Voices

The Science Behind Voice Cloning

Mimic AI is a voice synthesis technology built on deep learning: its algorithms analyze and replicate human speech patterns, intonation, and vocal characteristics.

The voice cloning process involves several complex steps. First, the AI system processes hours of high-quality audio recordings of the target voice. These recordings are then broken down into phonemes (the smallest units of sound that distinguish one word from another). The AI analyzes these phonemes along with the speaker’s distinctive vocal traits, such as pitch, rhythm, and timbre.

Neural networks enable Mimic AI to generate new speech that mimics the original voice. This process involves not only replicating individual sounds but also capturing the nuances of speech flow, pauses, and emphasis that make each voice unique.
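To make "pauses" a little more concrete, here is a minimal sketch of one simple, widely used way to find them in a recording: energy thresholding. The audio is split into short frames, each frame's loudness is measured as its RMS (root-mean-square) amplitude, and a long enough run of quiet frames counts as a pause. This is not Mimic AI’s implementation. The function names, the 20 ms frame size, the 0.02 loudness threshold, and the 200 ms minimum pause are illustrative assumptions, and the audio is assumed to be a list of float samples between -1.0 and 1.0.

```python
import math

# Hypothetical helper names and default values, chosen for illustration only.


def rms(frame):
    """Root-mean-square amplitude of one frame: a simple loudness measure."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def find_pauses(samples, sample_rate, frame_ms=20, threshold=0.02, min_pause_ms=200):
    """Return (start_sec, end_sec) spans where loudness stays below `threshold`
    for at least `min_pause_ms`. `samples` are floats in [-1.0, 1.0]."""
    frame_len = int(sample_rate * frame_ms / 1000)
    n_frames = len(samples) // frame_len  # a trailing partial frame is ignored
    pauses = []
    start = None  # index of the first quiet frame in the current quiet run
    for k in range(n_frames):
        frame = samples[k * frame_len:(k + 1) * frame_len]
        quiet = rms(frame) < threshold
        if quiet and start is None:
            start = k
        elif not quiet and start is not None:
            if (k - start) * frame_ms >= min_pause_ms:
                pauses.append((start * frame_ms / 1000, k * frame_ms / 1000))
            start = None
    # Close a quiet run that lasts until the end of the recording.
    if start is not None and (n_frames - start) * frame_ms >= min_pause_ms:
        pauses.append((start * frame_ms / 1000, n_frames * frame_ms / 1000))
    return pauses


# Usage: half a second of a 220 Hz tone, 0.3 s of silence, then the tone again.
SR = 16000
tone = [0.5 * math.sin(2 * math.pi * 220 * t / SR) for t in range(SR // 2)]
silence = [0.0] * (SR * 3 // 10)
print(find_pauses(tone + silence + tone, SR))  # [(0.5, 0.8)]
```

Real speech is far messier than a tone and silence, so production systems typically adapt the threshold to background noise rather than fixing it in advance.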

Current Capabilities of Mimic AI

As of 2025, Mimic AI has reached significant milestones in voice replication. It can produce speech that closely resembles the original speaker, often fooling listeners in blind tests. Impressive as it is, though, the technology is not flawless.

Mimic AI excels at replicating consistent vocal patterns and can even generate speech in languages the original speaker doesn’t know. It still struggles, however, with spontaneous emotional inflections and the subtle variations that occur in natural conversation.

Practical Applications and Limitations

Many businesses have integrated Mimic AI into their platforms, allowing for the creation of personalized voice messages at scale. This technology has proven particularly effective in marketing campaigns, where a familiar voice can significantly boost engagement rates.

However, users should understand the technology’s current limitations. While Mimic AI can replicate a voice with high accuracy, it may not capture the full range of emotional nuances in human speech. For instance, subtle changes in tone that convey sarcasm or empathy might be lost in AI-generated speech.

The Road Ahead for Mimic AI

As we push the boundaries of this technology, it’s important to consider both its potential and its current constraints. The next frontier for Mimic AI lies in mastering subtle emotional cues, bringing us closer to truly human-like artificial voices.

This journey towards more authentic AI-generated voices leads us to an important question: How does human emotion manifest in voice, and can AI truly replicate these nuances? Let’s explore the emotional components of the human voice in our next section.

How Human Voices Express Emotion

The human voice is a powerful tool for emotional expression. At Drop Cowboy, we know how much emotional resonance matters in voice messages, which is why we study how voices convey emotion.

The Vocal Spectrum of Emotions

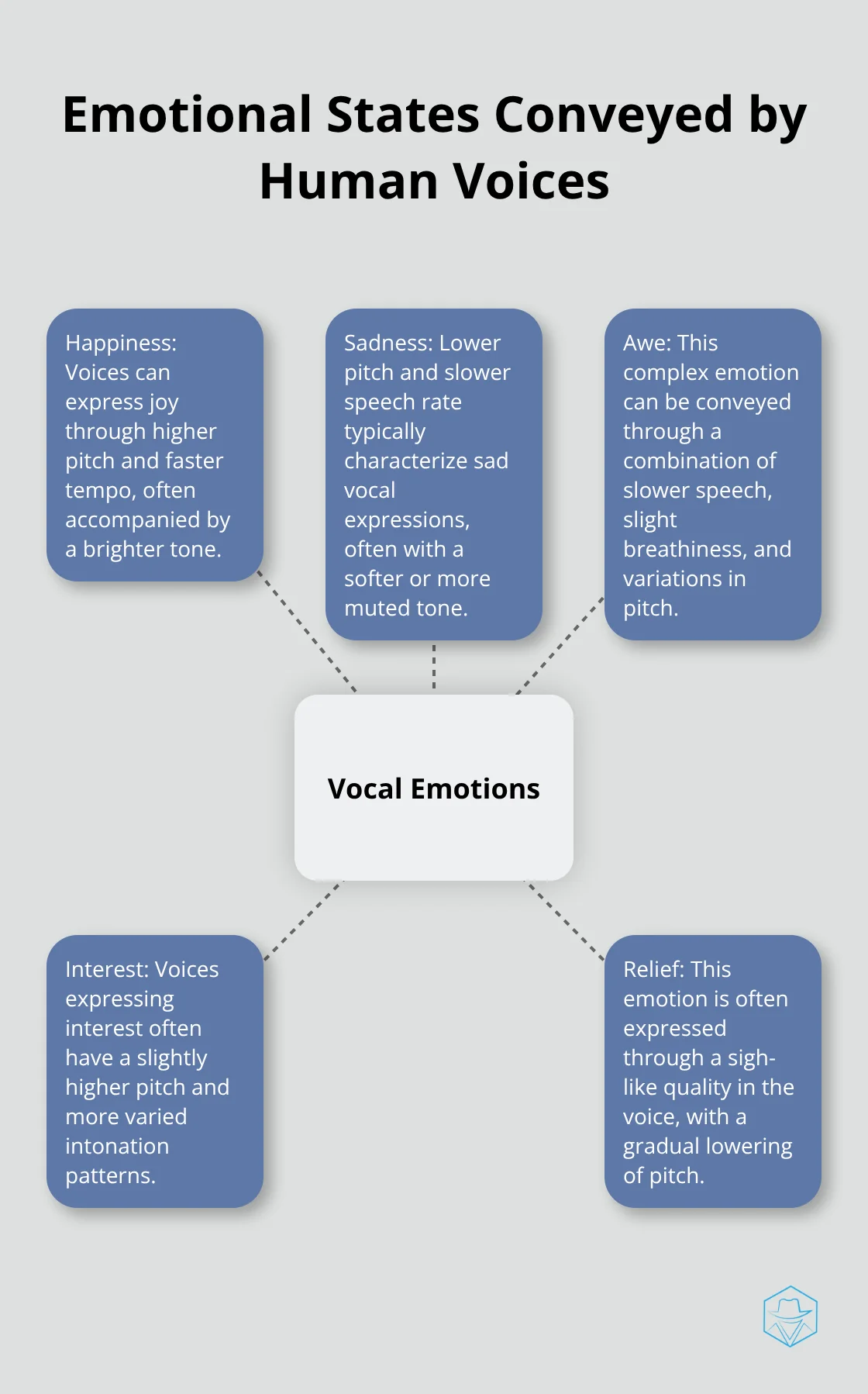

Human voices possess remarkable nuance, capable of expressing a wide array of emotions through subtle variations. Research from the University of Geneva has identified over 20 distinct emotional states that voices can convey. These include obvious emotions like happiness and sadness, as well as more complex states such as awe, interest, and relief.

Pitch: The Frequency of Feelings

Pitch plays a fundamental role in emotional expression through speech. A study published in the Journal of the Acoustical Society of America found that higher pitch often correlates with excitement or fear, while lower pitch can indicate calmness or sadness. When we’re excited, for instance, our vocal cords tense up, raising the pitch of our voice. The link works in the other direction too: customer service representatives often lower their pitch when dealing with upset customers, because a lower voice tends to have a calming effect.
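Pitch can be measured directly from audio. A classic textbook approach, not necessarily what Mimic AI uses, is autocorrelation: a voiced sound repeats roughly once per vocal-cord cycle, so the shift (lag) at which a short frame best matches a copy of itself gives the period, and the sample rate divided by that lag gives the pitch in hertz. The minimal sketch below assumes a single short, voiced frame of float samples and a 60 to 400 Hz search range, which is our own assumption for typical speaking voices. The second helper expresses a pitch in semitones above or below a speaker’s usual pitch, so "higher" and "lower" are judged relative to that speaker. Both function names are hypothetical.

```python
import math


def estimate_pitch(frame, sample_rate, fmin=60.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame by autocorrelation.

    Returns None when no lag in the search range gives a positive correlation
    (for example, an empty or silent frame).
    """
    n = len(frame)
    if n == 0:
        return None
    mean = sum(frame) / n
    x = [s - mean for s in frame]                  # remove any DC offset
    min_lag = int(sample_rate / fmax)              # shortest period accepted
    max_lag = min(int(sample_rate / fmin), n - 1)  # longest period accepted
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the frame and a copy of itself shifted by `lag` samples.
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag is not None else None


def semitones_from_baseline(f0_hz, baseline_hz):
    """Pitch relative to a speaker's typical pitch; +12 means one octave higher."""
    return 12.0 * math.log2(f0_hz / baseline_hz)


# Usage: 40 ms synthetic tones stand in for voiced speech frames.
SR = 16000


def sine(freq_hz, n_samples=640):
    return [math.sin(2 * math.pi * freq_hz * t / SR) for t in range(n_samples)]


usual = estimate_pitch(sine(110), SR)   # stands in for a speaker's typical pitch
raised = estimate_pitch(sine(220), SR)  # a frame spoken higher than usual
print(round(semitones_from_baseline(raised, usual), 1))  # close to 12 (about one octave up)
```

Plain autocorrelation is easy to follow but can lock onto the wrong period in noisy or breathy speech; more robust pitch trackers refine the same idea with normalization and voicing detection.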

Rhythm and Tempo: The Pulse of Speech

The speed and rhythm of speech also contribute significantly to emotional expression. Fast-paced speech often indicates excitement or urgency, while slower speech can convey thoughtfulness or sadness. A study by the Max Planck Institute for Empirical Aesthetics revealed that speech rate can influence the perceived emotional state of the speaker, regardless of the actual content of the message.
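Speech rate is commonly reported as words (or syllables) per minute. The minimal sketch below, with hypothetical function names, computes words per minute from a transcript and its duration, then compares it with a speaker’s usual rate so that "fast" and "slow" are judged relative to that speaker. Counting whitespace-separated words is a simplifying assumption; syllable counts track tempo more precisely.

```python
def words_per_minute(transcript: str, duration_sec: float) -> float:
    """Speaking rate in words per minute, counting whitespace-separated words."""
    if duration_sec <= 0:
        raise ValueError("duration_sec must be positive")
    return len(transcript.split()) / duration_sec * 60.0


def relative_tempo(rate_wpm: float, baseline_wpm: float) -> float:
    """Current rate divided by the speaker's usual rate: above 1.0 is faster than usual."""
    if baseline_wpm <= 0:
        raise ValueError("baseline_wpm must be positive")
    return rate_wpm / baseline_wpm


# Usage: 30 words spoken in 10 seconds by someone who usually speaks at 150 wpm.
rate = words_per_minute(" ".join(["word"] * 30), 10.0)
print(rate)                         # 180.0
print(relative_tempo(rate, 150.0))  # 1.2
```

A ratio like 1.2 (20% faster than usual) is the kind of relative signal a listener picks up on, independent of what the words actually say.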

AI’s Hurdle: Replicating Emotional Subtleties

While Mimic AI has made significant progress in replicating human voices, capturing the full spectrum of emotional nuance remains challenging. Humans instinctively combine pitch, rhythm, and other vocal characteristics to convey emotion, and that interplay is extremely difficult to model.

Sarcasm, for example, presents a particular challenge for AI replication. It often involves a mismatch between the words spoken and the tone used, a nuance that current AI systems struggle to capture consistently.

The Business Impact of Emotional Voice Replication

Understanding these emotional components of the human voice is essential for businesses that want to use voice technology in their marketing. It’s not just about what you say, but how you say it. As AI gets better at replicating human emotion, the potential for more engaging, emotionally resonant voice marketing campaigns grows.

The next frontier in AI voice technology involves bridging the gap between artificial and human emotional expression. How close has Mimic AI come to achieving this goal? Let’s explore the current state of AI-generated emotional speech and its real-world applications.

How Close Is Mimic AI to Human Emotion?

The Current State of AI Emotional Speech

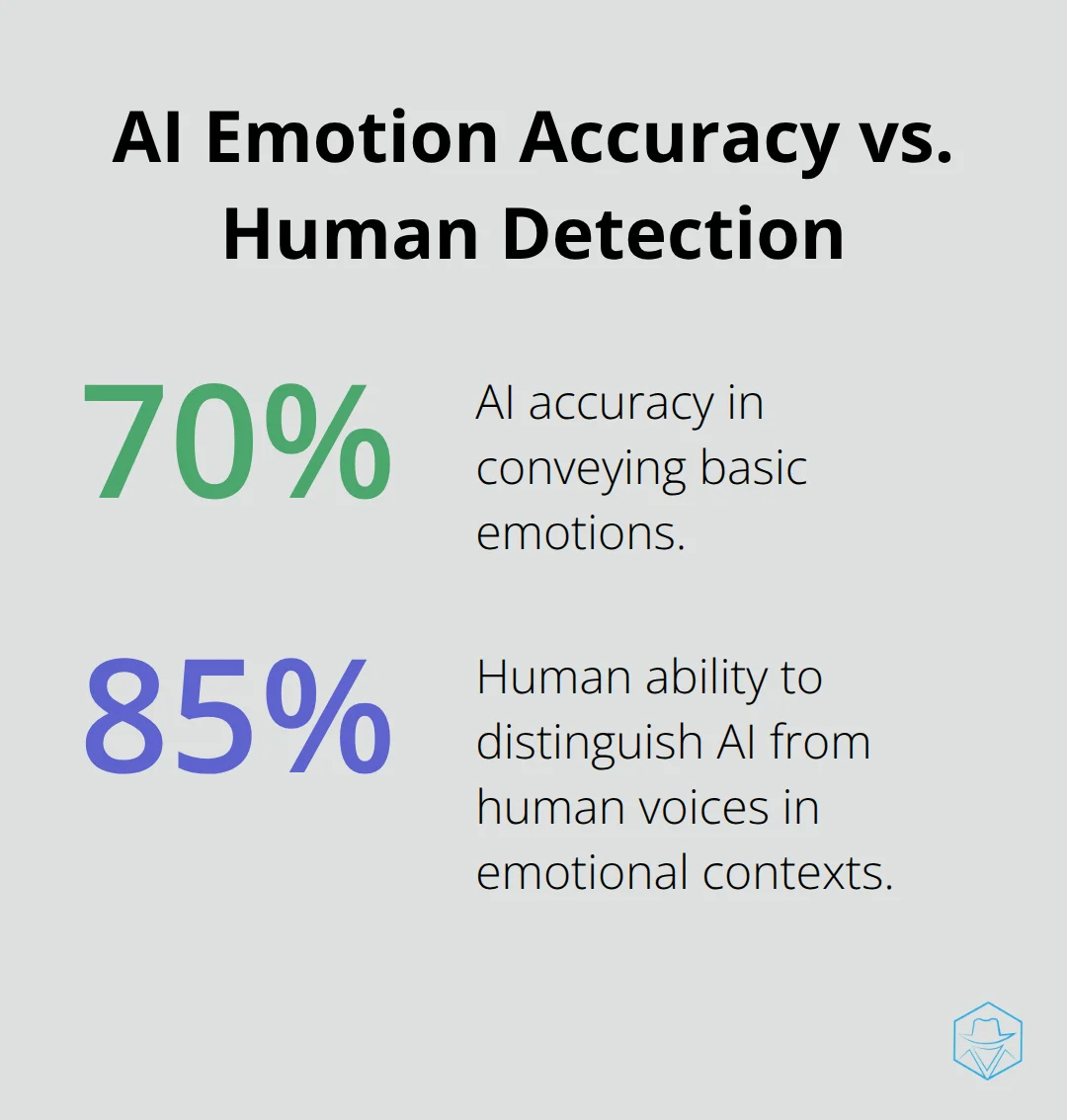

Mimic AI has advanced significantly in replicating human voices, but emotional expression remains a complex challenge. Recent studies from the MIT Media Lab reveal that AI-generated voices now convey basic emotions with up to 70% accuracy (a marked improvement from the 50% accuracy of a few years ago). However, human listeners can still distinguish between AI and human voices in emotional contexts about 85% of the time.

The remaining gap lies in the nuanced emotional expressions that humans produce effortlessly. Mimic AI handles consistent vocal patterns well, but the spontaneous emotional inflections of natural speech are still hard for it to reproduce.

Real-World Applications and Their Limits

Businesses are finding creative ways to use Mimic AI’s capabilities despite these challenges. For instance, a major e-commerce platform reported a 15% increase in customer satisfaction when using AI-generated voices with basic emotional cues for its automated customer service responses.

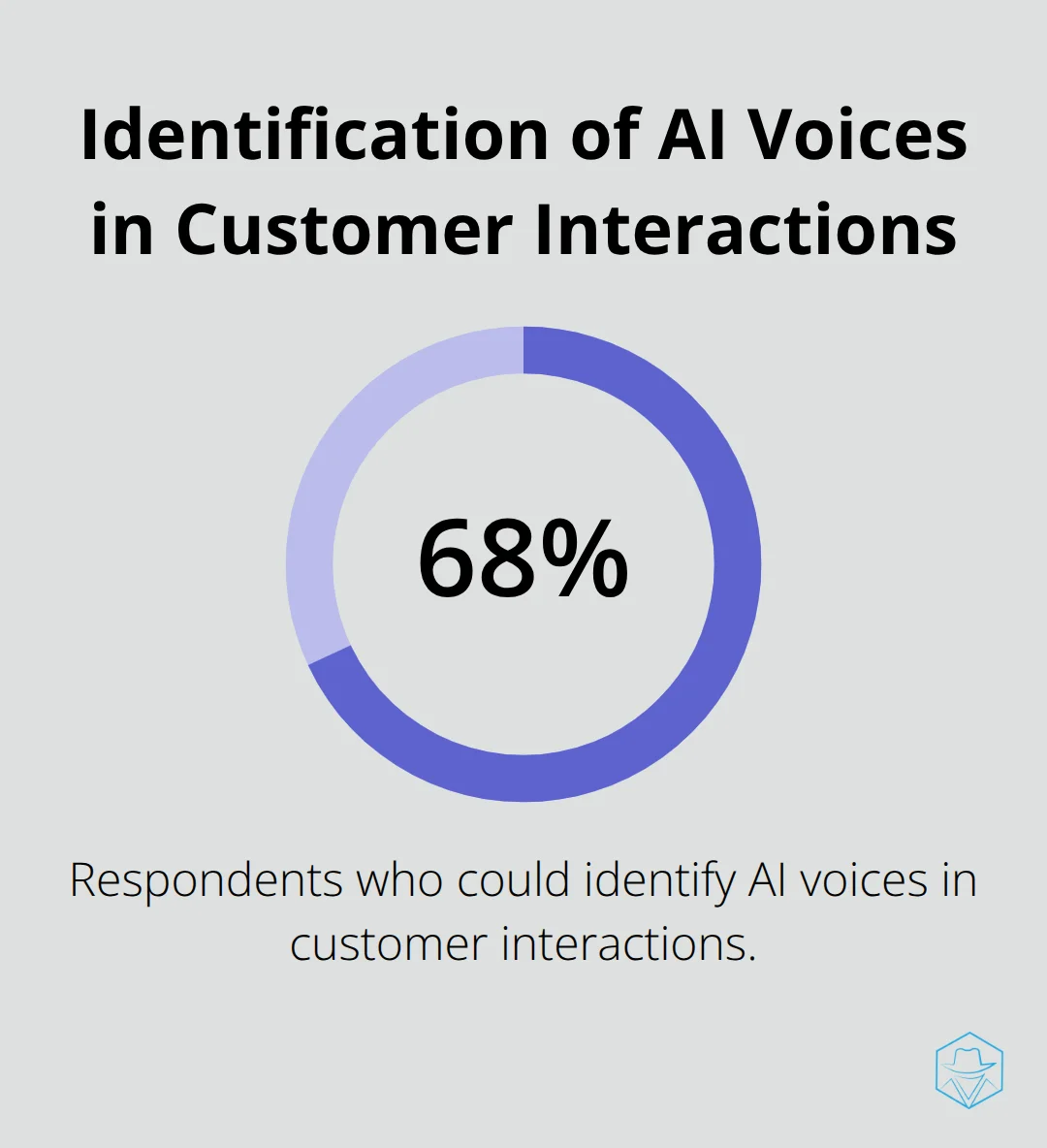

However, understanding the technology’s limitations is crucial. A recent survey by the Customer Experience Professional Association found that 68% of respondents could tell when they interacted with an AI voice, primarily due to a lack of emotional authenticity.

The Future of Emotional AI Voices

The next frontier for Mimic AI is mastering vocal micro-expressions: the subtle changes in tone that convey complex emotions such as empathy, sarcasm, or excitement. Researchers at Stanford University are currently working on algorithms that can detect and replicate these micro-expressions, which could help close the gap between AI and human emotional expression.

Implications for Businesses

As AI voice technology evolves, businesses must consider both its potential and constraints. The ability to generate emotionally resonant messages at scale could revolutionize customer engagement strategies. However, companies must balance the benefits of AI-generated voices with the risk of alienating customers who value authentic human interaction.

Ethical Considerations

The advancement of emotionally expressive AI voices raises important ethical questions. As these technologies become more sophisticated, we must consider the implications of using AI-generated emotional content in marketing and customer service. Transparency about the use of AI voices will likely become increasingly important to maintain trust with consumers.

Final Thoughts

Mimic AI has advanced significantly in replicating human voices, yet emotional expression remains a complex challenge. AI voices can now convey basic feelings with increasing accuracy, but they still fall short of capturing the full spectrum of human emotional nuance. The subtle interplay of pitch, rhythm, and micro-expressions that humans employ effortlessly continues to challenge even the most advanced AI systems.

Emotionally expressive AI voices have enormous potential to change how we communicate. Researchers are working on algorithms that detect and replicate vocal micro-expressions, which may soon lead to AI voices that express complex emotions more authentically. That advance could transform industries from customer service to entertainment, opening new avenues for personalized, emotionally resonant interactions at scale.

At Drop Cowboy, we recognize both the potential and responsibility that comes with advanced voice technology. Our Mimic AI™ feature allows businesses to create personalized voice messages, enhancing engagement while maintaining transparency about AI use (a critical aspect in today’s tech landscape). As we innovate in this space, we remain committed to ethical practices and clear communication with our users.

blog-dropcowboy-com