Is Your AI Voice Clone Indistinguishable from the Real You? [2025]

![Is Your AI Voice Clone Indistinguishable from the Real You? [2025]](/blog/wp-content/uploads/emplibot/voice-authenticity-hero-1756858006.webp)

AI voice cloning has made remarkable strides in recent years, pushing the boundaries of voice authenticity. At Drop Cowboy, we’ve witnessed firsthand how this technology is reshaping communication across industries.

As AI-generated voices become increasingly lifelike, it’s crucial to understand the factors that contribute to their realism and how to evaluate their quality. This blog post explores the current state of AI voice cloning and its potential impact on our digital interactions.

How Does AI Voice Cloning Work?

The Science Behind Voice Cloning

AI voice cloning transforms the way we interact with technology and each other. This technology uses deep learning algorithms to analyze and replicate human speech patterns, creating synthetic voices that sound remarkably real.

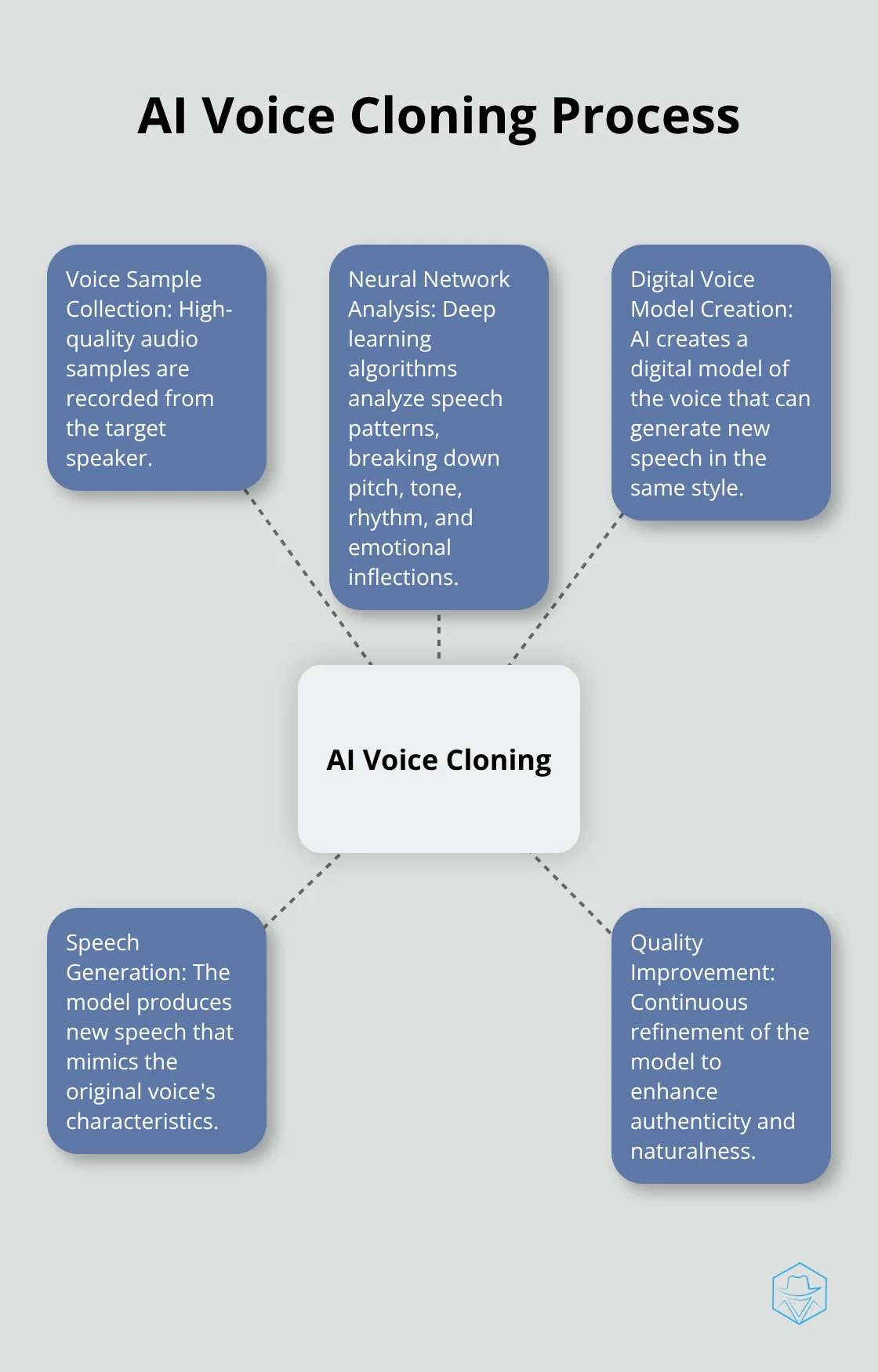

The process starts with the collection of high-quality voice samples from a target speaker. Neural networks then break down the voice into its fundamental components (pitch, tone, rhythm, and emotional inflections). The AI learns to mimic these elements, creating a digital model of the voice that can generate new speech in the same style.

A 2024 study by the MIT Media Lab revealed that modern AI voice cloning systems can create a convincing replica with as little as 5 minutes of recorded speech. This marks a significant improvement from just a few years ago when hours of audio were required.

Industry Leaders Pushing Boundaries

Several companies lead the charge in AI voice cloning technology. Descript has made waves with its Overdub feature, which lets content creators edit audio by simply typing. Respeecher has worked on high-profile projects, including recreating James Earl Jones’s Darth Vader voice for the 2022 series Obi-Wan Kenobi.

Drop Cowboy has also entered this space with Mimic AI™ technology, enabling businesses to create personalized voice messages at scale. Our system allows for dynamic personalization, ensuring each message feels tailored to the recipient.

Recent Breakthroughs in Voice Synthesis

The quality of AI-generated voices has improved dramatically in recent years. A 2025 report from the Audio Engineering Society noted that the latest voice synthesis models can now accurately replicate the subtle vocal inflections and emotional cues that previously only human voice actors could deliver.

One significant advancement is the ability to maintain voice consistency across different languages. This breakthrough has enormous implications for global businesses, allowing them to create multilingual content without the need for multiple voice actors.

Ethical Considerations

As AI voice cloning technology continues to evolve, it’s important to consider the ethical implications. The same tools can be misused for impersonation or fraud, so industry leaders must work together to establish guidelines for responsible use.

The Future of Voice Cloning

The potential applications for AI voice cloning are vast and exciting. From personalized virtual assistants to faster, more natural dubbing in the film industry, we’re only scratching the surface of what’s possible. As we move forward, it’s essential to balance innovation with responsible development and use of this powerful technology.

With the basics of AI voice cloning covered, let’s explore the factors that affect the authenticity of these synthetic voices and how closely they can mimic human speech.

What Makes AI Voices Sound Real?

Mastering Vocal Nuances

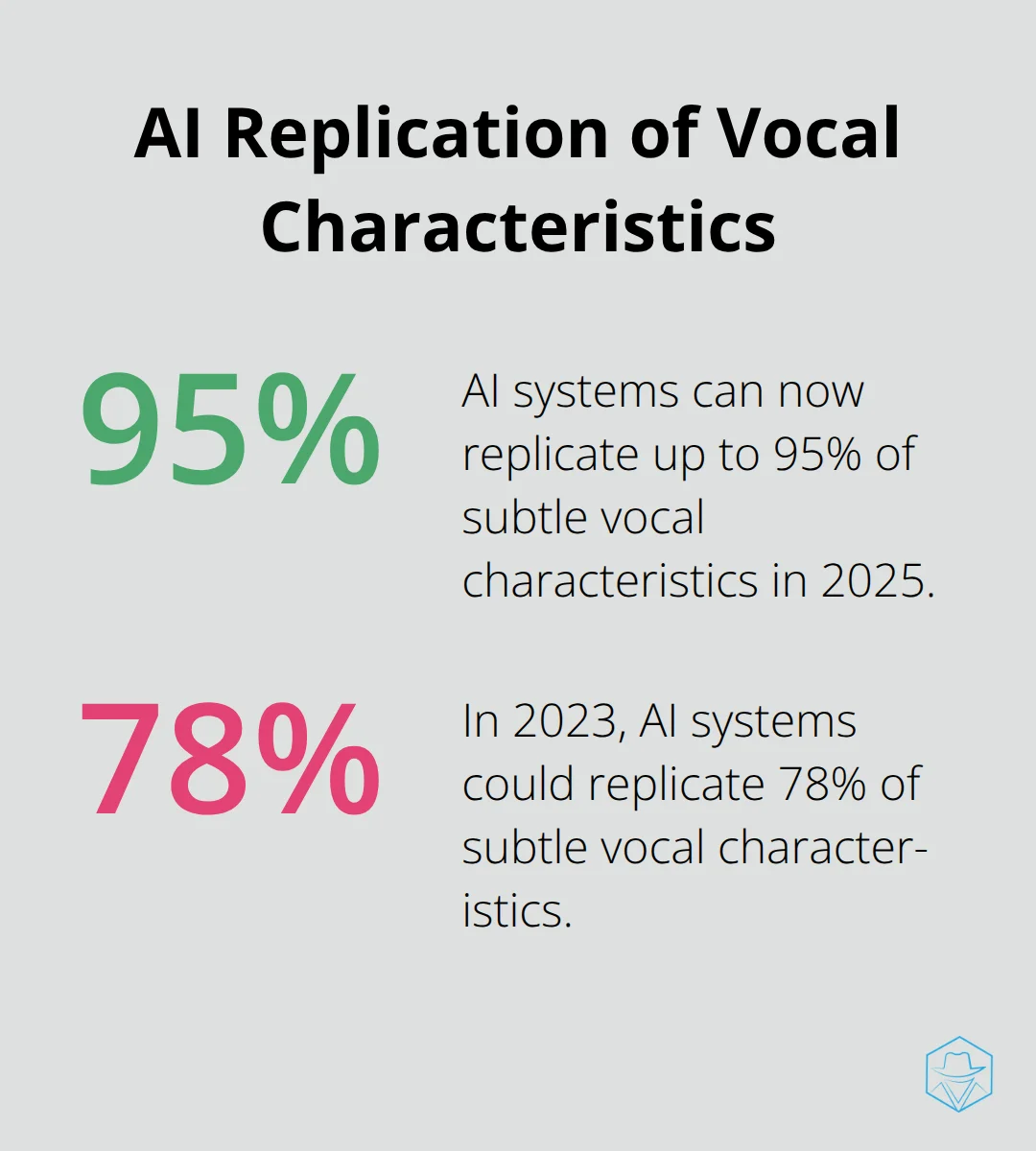

The human voice contains intricate variations in pitch, tone, and rhythm that define individual speech patterns. Advanced AI models now analyze these micro-patterns to create more realistic voice clones. A 2025 study by the University of Cambridge revealed that AI systems can replicate up to 95% of these subtle vocal characteristics (a significant improvement from 78% in 2023).

To achieve this level of accuracy, voice cloning systems need high-quality audio samples. Professional recording equipment in a controlled environment captures the clearest representation of the target voice.

Emotional Intelligence in Synthetic Speech

Replicating emotional tone and inflection presents a significant challenge in voice cloning. Humans naturally vary their speech patterns based on context and emotion, and AI must learn to do the same.

Recent advancements in emotional AI have made substantial progress in this area. The VocalEQ project at Stanford University developed algorithms that detect and replicate emotional states in speech with 87% accuracy. This technology allows AI voices to convey excitement, empathy, or concern, which makes interactions feel more natural and engaging.

Environmental Authenticity

Background noise and environmental factors play a vital role in the perceived authenticity of a voice. Completely pristine audio can sometimes sound artificial, as our brains expect a certain level of ambient sound in real-world conversations.

Some voice cloning systems now incorporate adaptive background noise modeling to address this issue. This technology adds subtle, context-appropriate ambient sounds to synthetic speech, which makes it sound more natural in various settings.
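To make the idea of adding ambient sound concrete, here is a minimal Python sketch that mixes a noise track into speech at a chosen signal-to-noise ratio. It is an illustration only, not how any particular product works: it uses a fixed SNR rather than adaptive modeling, and the function names (`rms`, `mix_at_snr`) and the synthetic test signals are assumptions made for this example.

```python
import math
import random


def rms(samples):
    """Root-mean-square level of a sequence of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so the speech-to-noise ratio equals snr_db, then add it."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[:len(speech)]
    speech_rms, noise_rms = rms(speech), rms(noise)
    if noise_rms == 0:
        return list(speech)  # silent noise track: nothing to add
    # SNR(dB) = 20 * log10(speech_rms / noise_rms), solved for the noise level
    target_noise_rms = speech_rms / (10 ** (snr_db / 20))
    gain = target_noise_rms / noise_rms
    return [s + gain * n for s, n in zip(speech, noise)]


# Synthetic stand-ins (assumed data): a 220 Hz tone as "speech", white noise as "room tone"
sample_rate = 16000
speech = [0.5 * math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(sample_rate)]
rng = random.Random(0)
noise = [rng.uniform(-1, 1) for _ in range(sample_rate)]

mixed = mix_at_snr(speech, noise, snr_db=30)
```

A higher `snr_db` keeps the ambience quieter. An adaptive system would presumably pick the noise type and level from context, which this sketch does not attempt.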

Navigating Accents and Languages

Language and accent considerations become essential when creating globally applicable voice clones. AI systems must replicate not only the words being spoken but also the unique cadence and pronunciation of different dialects and languages.

The polyglot AI developed by DeepMind in 2024 can switch between 100 different languages and accents while maintaining the core characteristics of the original voice. This breakthrough has enormous implications for businesses operating in multiple markets, as it allows them to create localized content without losing brand consistency.

As AI voice cloning technology continues to evolve, the line between synthetic and human voices becomes increasingly blurred. The next step involves rigorous testing and evaluation to determine just how close these AI-generated voices come to their human counterparts.

How Accurate Are AI Voice Clones?

At Drop Cowboy, we push the boundaries of AI voice technology. But how do we measure the accuracy of these synthetic voices? Let’s explore the methods and tools used to evaluate AI voice clones and examine some real-world applications.

The Turing Test for Voice

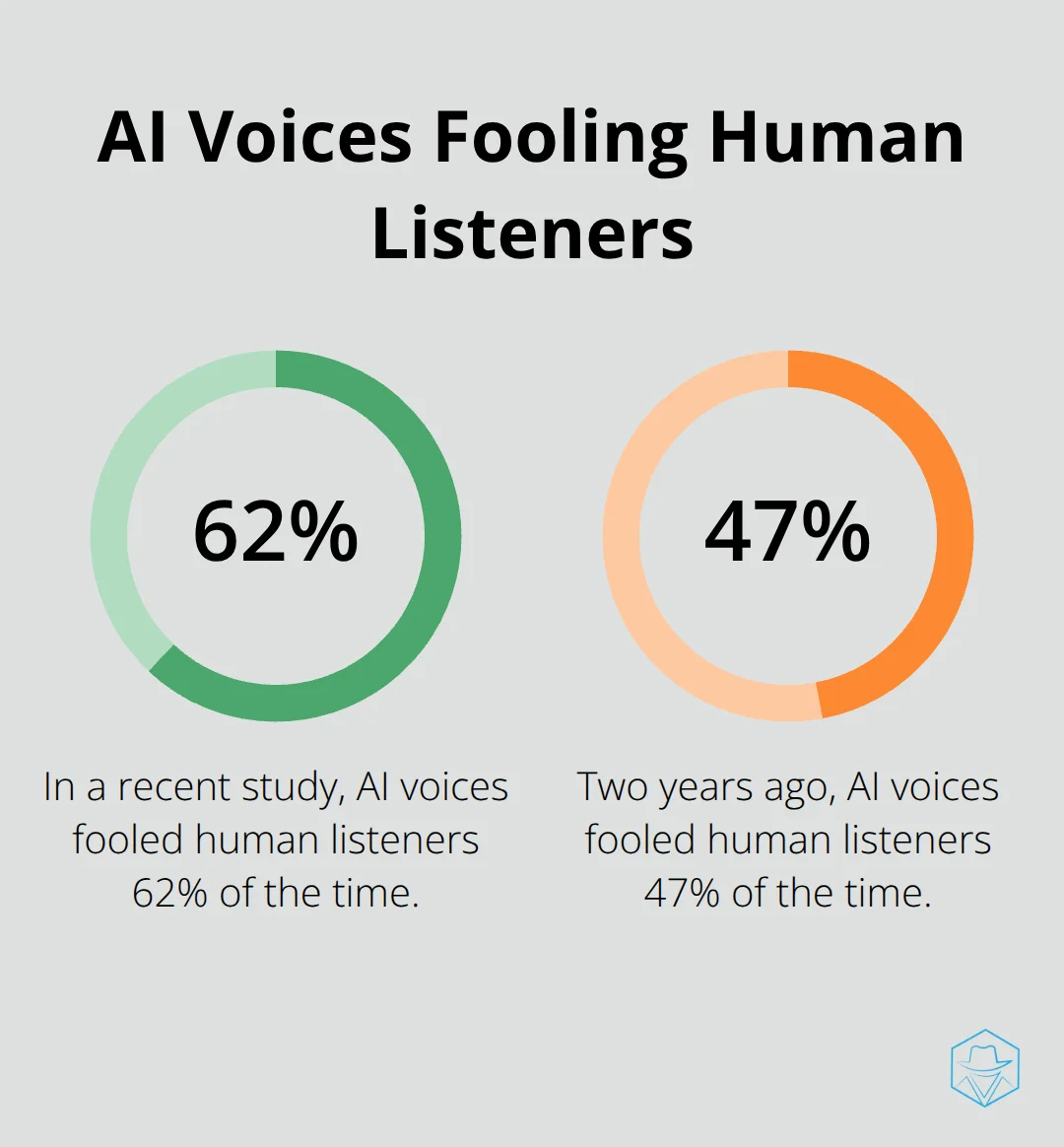

The gold standard for evaluating AI voice clones is a modified version of the Turing Test. In this test, human listeners hear both AI-generated and human voice samples, then try to distinguish between them. A recent study by the University of Edinburgh found that AI voices fooled listeners 62% of the time (up from 47% just two years ago).

To conduct your own informal Turing Test, you can use platforms like Amazon Mechanical Turk or Prolific to recruit participants. Present them with a mix of AI and human voice samples, then analyze the results to gauge the authenticity of your AI voice clone.
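Once responses come back, the scoring can be sketched in a few lines of Python. The example below assumes each response is a `(truth, guess)` pair labeled `"ai"` or `"human"`; that data layout, the function names, and the sample responses are hypothetical and would need adapting to whatever your survey platform exports.

```python
from math import comb


def fool_rate(responses):
    """Fraction of AI samples that listeners labeled as human."""
    ai_guesses = [guess for truth, guess in responses if truth == "ai"]
    if not ai_guesses:
        raise ValueError("no AI samples in responses")
    return sum(1 for g in ai_guesses if g == "human") / len(ai_guesses)


def p_value_above_chance(correct, total):
    """One-sided exact binomial p-value: P(X >= correct) if listeners guess at 50%."""
    return sum(comb(total, k) for k in range(correct, total + 1)) / 2 ** total


# Made-up example data: (what the clip really was, what the listener said)
responses = [
    ("ai", "human"), ("ai", "ai"), ("ai", "human"), ("ai", "human"),
    ("human", "human"), ("human", "ai"), ("human", "human"), ("human", "human"),
]

correct = sum(1 for truth, guess in responses if truth == guess)
print(fool_rate(responses))                            # 0.75
print(p_value_above_chance(correct, len(responses)))   # 0.63671875
```

A large p-value means listeners did no better than coin-flipping at telling the clips apart. With only a handful of responses the test has little power, so treat small informal studies as directional rather than conclusive.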

Acoustic Analysis Tools

Professional voice analysts use sophisticated software to compare AI-generated voices with their human counterparts. Praat, an open-source tool developed at the University of Amsterdam, allows for detailed analysis of pitch, formant frequencies, and spectrograms. For those who want a more user-friendly option, Audacity offers a basic spectrogram view that can reveal telltale signs of synthetic speech.
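For a sense of what pitch analysis involves, the following Python sketch estimates the fundamental frequency of a single audio frame by finding the strongest autocorrelation peak. It is a deliberately simplified stand-in, not Praat's algorithm; the function name `estimate_pitch`, the 75 to 500 Hz search range, and the synthetic test tone are assumptions chosen for illustration.

```python
import math


def estimate_pitch(samples, sample_rate, fmin=75.0, fmax=500.0):
    """Estimate the fundamental frequency (Hz) of one frame via autocorrelation.

    Returns None when no positive correlation peak is found (e.g. silence).
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = int(sample_rate / fmin)  # longest period considered
    if len(samples) <= max_lag:
        raise ValueError("frame too short for the requested fmin")
    mean = sum(samples) / len(samples)
    x = [s - mean for s in samples]    # remove DC offset
    best_lag, best_score = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Correlation of the frame with a copy of itself shifted by `lag` samples
        score = sum(x[i] * x[i + lag] for i in range(len(x) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else None


# Assumed test signal: 0.1 s of a pure 200 Hz tone sampled at 8 kHz
sample_rate = 8000
frame = [0.5 * math.sin(2 * math.pi * 200 * t / sample_rate) for t in range(800)]
print(estimate_pitch(frame, sample_rate))  # 200.0
```

Running this frame by frame over a human recording and its clone, then comparing the two pitch contours, gives a rough view of how closely the clone tracks the speaker's intonation. Real speech is noisier than a pure tone, so dedicated tools add voicing detection and smoothing on top of this basic idea.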

Real-World Success Stories

AI voice cloning has already made significant inroads in various industries. In 2024, a major audiobook publisher used AI to clone the voice of a popular narrator who had lost their voice due to illness. The resulting audiobook received critical acclaim, with most listeners unable to distinguish between the original narration and the AI-generated portions.

The film industry has also embraced this technology. The AI recreation of James Earl Jones’s iconic Darth Vader voice, mentioned above, lets the character live on even after the actor stepped back from the role.

Overcoming Common Challenges

Despite rapid advancements, AI voice cloning still faces challenges. One major hurdle is maintaining consistency across long-form content. AI voices can sometimes exhibit subtle shifts in tone or pacing that human listeners may find jarring. To address this, developers implement advanced neural networks that analyze entire paragraphs or scripts to maintain consistent delivery.

Another area for improvement is the handling of non-verbal vocalizations like laughs, sighs, or hesitations. These subtle cues play a crucial role in natural speech but are often overlooked in AI voice models. Companies like Sonantic (acquired by Spotify in 2022) have made strides in this area, developing AI that can replicate these nuanced vocal behaviors.

Conveying emotion remains a complex challenge. While AI can mimic basic emotions, it struggles with more nuanced feelings or rapid emotional shifts. Ongoing research at the MIT Media Lab focuses on developing models that can better understand and replicate the full spectrum of human emotional expression in speech.

Voice synthesis has come a long way since the 1980s, and today’s best AI-generated voices can be difficult to tell apart from human speech. This technological leap has opened up new possibilities for personalized voice marketing, allowing businesses to create highly accurate replicas of human voices for various applications.

Final Thoughts

AI voice cloning technology has advanced significantly, creating synthetic voices that often match human speech in authenticity. This progress opens new possibilities across industries, from entertainment to customer service. The future promises further refinements in voice authenticity, including improved emotional expression and real-time adaptability.

Ethical considerations remain paramount as AI voice technology evolves. Industry leaders must address issues of consent, privacy, and potential misuse to ensure responsible innovation. The impact of AI voice cloning extends to various sectors, revolutionizing content creation, marketing, and accessibility services.

Drop Cowboy’s Mimic AI™ technology enables businesses to create personalized voice messages at scale, enhancing communication effectiveness. As we explore the potential of AI voices, we must balance excitement with responsibility and ensure these advancements benefit society as a whole. The future of voice technology holds immense promise, with applications ranging from personalized virtual assistants to groundbreaking healthcare solutions.
